Robots.txt File: A Beginner's Guide

What is a Robots.txt File?

A robots.txt file is a text file located on a website's server that serves as a set of instructions for web crawlers or robots, such as search engine spiders.

It's designed to communicate with these automated agents, guiding them on which parts of the website are open for indexing and which should be excluded. By defining specific rules and directives within the robots.txt file, website administrators can control how search engines and other automated tools access and interact with their site's content.

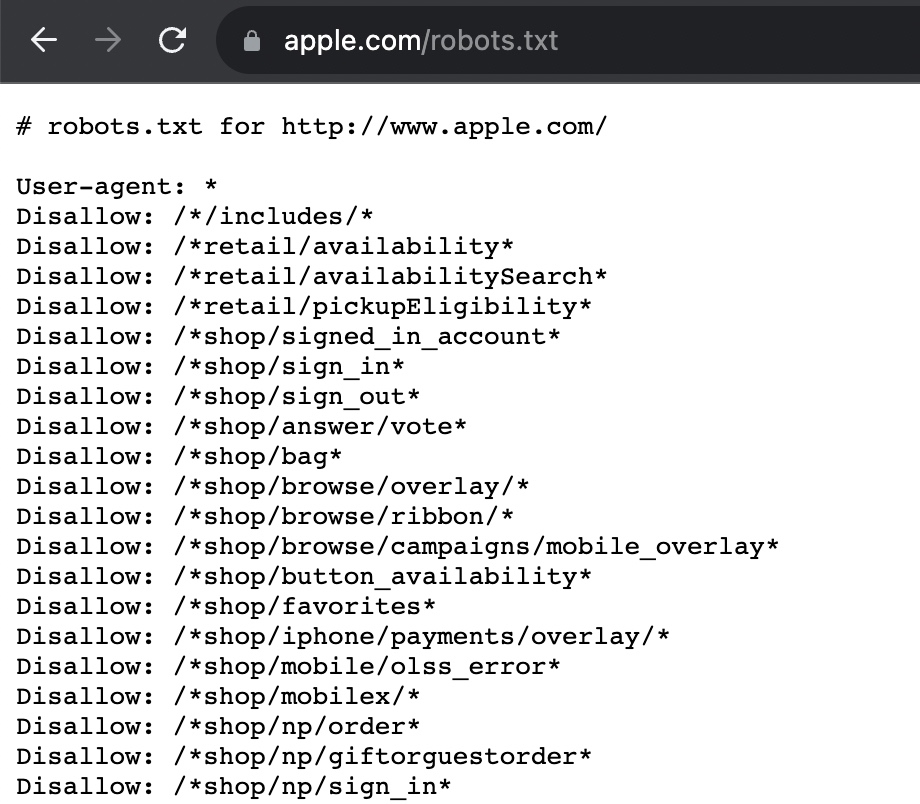

For example, below is a screenshot of Apple.com's robots.txt file. It's quite long, so we've just included the first part, but it shows how this file looks like if you were to visit it in your browser.

Why are Robots.txt Files Important for SEO?

There are many reasons why Robots.txt files are necessary and useful for your SEO:

Stop Duplicate Content Appearing in SERPs

The robots.txt file helps reduce duplicate content issues by instructing search engine bots not to crawl duplicate or redundant pages. This makes it more likely that only the most relevant and authoritative version of a page is displayed in search engine results pages (SERPs), which can improve search ranking and user experience.

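Important Note: For duplicate content, the best practice is to use canonical tags rather than robots.txt. The two are not equivalent: a canonical tag tells search engines which version of a page to index and consolidates ranking signals onto it, whereas robots.txt only stops compliant crawlers from fetching the blocked URLs.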

Keep Certain Website Sections Private

Robots.txt allows website owners to designate specific areas or directories, such as login pages or administrative sections, as off-limits to search engine crawlers, which helps keep them out of public search results. Bear in mind that the robots.txt file is itself publicly readable and is followed voluntarily by crawlers, so it is not a security mechanism: genuinely confidential content should also be protected with authentication.

Prevent Internal Search URLs from Becoming Public

To avoid cluttering search engine results with internal search results pages, which are often low-quality and irrelevant to users, robots.txt can be used to block crawlers from these URLs. This prevents search engines from wasting resources and keeps search results cleaner and more user-friendly.

For example, if there is a search page example.com/search, and it’s configured to display the query in the URL (e.g. example.com/search?query=my+search+keywords) then this can lead to messy or irrelevant pages that shouldn’t be shown in SERPs.
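As a rough illustration of how such a rule behaves, the sketch below uses Python's standard-library `urllib.robotparser` to check two URLs against a hypothetical rule set. The `Disallow: /search` rule and the blog URL are assumptions made for this example, not rules taken from a real site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep all crawlers out of internal search result pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

search_url = "https://example.com/search?query=my+search+keywords"
blog_url = "https://example.com/blog/robots-guide"

# Rules match by URL prefix, so every /search?... variation is covered.
search_allowed = parser.can_fetch("*", search_url)
blog_allowed = parser.can_fetch("*", blog_url)

print("search page crawlable:", search_allowed)
print("blog page crawlable:", blog_allowed)
```

Because matching is prefix-based, a single rule covers every query string that follows /search.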

Block Certain Files from Being Indexed (Images, PDFs, CSVs)

Robots.txt files allow webmasters to specify which types of files should be excluded from search engine indexing. By blocking files like images, PDFs, or CSVs, website owners can improve crawl efficiency and focus search engine attention on the most valuable content, potentially boosting SEO performance.

There are some instances where it may be useful to allow some types of files to be indexed, for example, if the PDFs are content pieces that are supposed to be for public consumption.

Prevent Servers From Becoming Abused

In some cases, excessive crawling activity can overwhelm a website's servers, leading to poor site performance and downtime. Robots.txt helps reduce this load by telling well-behaved bots which areas to skip, so that server resources are used efficiently and the website's overall SEO health is protected. Malicious bots can simply ignore the file, so blocking them requires server-level controls. Robots.txt also helps manage and optimize the crawl budget and, for crawlers that support a crawl delay, limits how many pages can be crawled during a certain period of time.

Show Location of Sitemap File(s)

Robots.txt can be used to indicate the location of a website's XML sitemap(s). This assists search engines in finding and indexing all relevant pages efficiently, which is essential for ensuring that a website's content is properly represented in search results and subsequently improving its SEO visibility.

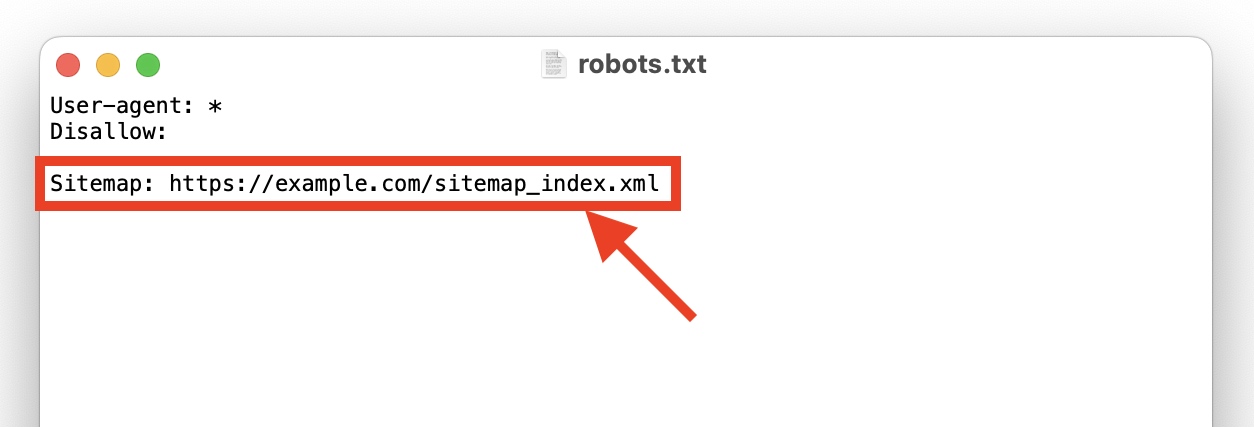

For example, you'll see a sitemap directive to the sitemap index file in the below robots.txt file:

Where Should You Put Your Robots.txt File?

To ensure that search engine crawlers can locate and read your robots.txt file, you should place it in the root directory of your website's server. The root directory is the main folder where your website's homepage (e.g. index.html) is located. Here's the typical file path:

www.example.com/robots.txt

Make sure that the robots.txt file is accessible via a direct URL, with no restrictions or password protection in place that might prevent search engine bots from reaching it. This placement allows web crawlers to easily locate the file and follow its instructions to determine which parts of your website they can crawl.
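To make the placement rule concrete, here is a minimal Python sketch that derives the robots.txt address a crawler would request for any given page. The function name `robots_txt_url` and the example URLs are hypothetical. It relies on the fact that crawlers look for the file per scheme and host, so a subdomain needs its own robots.txt.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL that governs the given page URL."""
    parts = urlsplit(page_url)
    # Keep only the scheme and host (including any port);
    # the path is always /robots.txt at the root.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://www.example.com/blog/post?id=7"))
print(robots_txt_url("http://shop.example.com:8080/a/b"))
```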

Robots.txt Limitations

While this essential file can help with many things when it comes to guiding crawlers, there are certain limitations as well, according to Google:

Not all search engines support robots.txt rules

While robots.txt is a standard protocol for guiding web crawlers, not all spiders fully adhere to its directives. Some search engines may choose to ignore or only partially follow the instructions provided in a robots.txt file, potentially leading to unintended indexing of restricted content.

Crawlers vary in their interpretation of syntax

Different web crawlers may interpret the syntax and directives in a robots.txt file differently. This variance in interpretation can result in inconsistencies in how search engines follow the rules, possibly leading to unexpected content indexing or exclusion, depending on the specific crawler's behavior.

A disallowed page in robots.txt can still be indexed via external links

While robots.txt can prevent search engine bots from crawling a particular page directly, it doesn't prevent external websites from linking to that page. If other websites link to a disallowed page, search engines may discover and index it through those external links, bypassing the robots.txt restrictions and potentially making the page accessible in search results.

Search Engine Bot Examples

Below are some of the most common search engine bots, so when it comes to creating your robots.txt file, you know which ones are out there, in case you want to single any out or block any:

Google Bots

Baidu Bots

Bing Bots

MSN Bots

Other Bots

Robots.txt Syntax

The syntax for robots.txt is quite easy to learn, so let’s explore what each directive means and what it does:

User-agent:

This line specifies the web crawler or user agent to which the subsequent rules or directives apply. For example, "User-agent: Googlebot" targets Google's web crawler. A potential danger is omitting the User-agent line altogether: rules that are not preceded by one do not belong to any group and are generally ignored by crawlers, so the blocking you intended silently does nothing. If you want your rules to apply to all bots, make sure the line is set to “User-agent: *”
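The sketch below shows how user-agent groups are selected, using Python's standard-library `urllib.robotparser`. The rule set, the paths, and the crawler name `SomeOtherBot` are hypothetical. A crawler that finds a group naming it uses that group only; the `*` group is a fallback for everyone else.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: one group for Googlebot, one fallback group for all others.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /drafts/

User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

url_drafts = "https://example.com/drafts/post"
url_private = "https://example.com/private/report"

# Googlebot matches its own group, so only /drafts/ is blocked for it.
googlebot_drafts = parser.can_fetch("Googlebot", url_drafts)
googlebot_private = parser.can_fetch("Googlebot", url_private)

# Any other crawler falls back to the "*" group, so only /private/ is blocked.
other_drafts = parser.can_fetch("SomeOtherBot", url_drafts)
other_private = parser.can_fetch("SomeOtherBot", url_private)

print(googlebot_drafts, googlebot_private, other_drafts, other_private)
```

Note that Googlebot is allowed into /private/ here, which is easy to overlook: rules in the `*` group are not inherited by more specific groups.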

Disallow:

This directive indicates the web pages or directories that should not be crawled or indexed by the user agent mentioned earlier. It specifies the relative paths of the disallowed content. The danger here lies in overusing Disallow, potentially blocking vital pages or entire sections of your site from search engines, which can harm your search visibility.

Allow:

This rule can be used to counteract a broader Disallow rule. It allows you to permit the crawling of certain pages or directories that would otherwise be blocked by a Disallow directive. The danger is that crawlers may resolve conflicts between Allow and Disallow differently, and some older or less common crawlers may not recognize Allow at all, which could lead to different behavior among search engines.
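Here is a small sketch of an Allow exception inside a blocked directory, checked with Python's `urllib.robotparser`. The paths are hypothetical. Google picks the most specific (longest) matching rule, while this standard-library parser applies the first matching rule in file order, so the example lists the Allow line first to get the same result under both interpretations.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block /downloads/ but keep one brochure crawlable.
ROBOTS_TXT = """\
User-agent: *
Allow: /downloads/brochure.pdf
Disallow: /downloads/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

brochure_allowed = parser.can_fetch("*", "https://example.com/downloads/brochure.pdf")
other_file_allowed = parser.can_fetch("*", "https://example.com/downloads/internal.pdf")

print("brochure crawlable:", brochure_allowed)
print("other file crawlable:", other_file_allowed)
```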

Crawl-delay:

This directive sets a delay (in seconds) between successive requests made by the user agent to your server. It can be used to reduce server load caused by excessive crawling. The danger is that not all crawlers support this directive (Googlebot, for example, ignores it), and excessive delays can negatively affect your site's crawl efficiency.
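For a sense of how a cooperative crawler might read this value, the sketch below uses `crawl_delay()` from Python's `urllib.robotparser`. The rules and the crawler name `SomeOtherBot` are hypothetical. This particular parser only accepts whole-number delays, which is one more example of implementations differing.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: ask bingbot to wait 10 seconds between requests.
ROBOTS_TXT = """\
User-agent: bingbot
Crawl-delay: 10

User-agent: *
Disallow:
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

bing_delay = parser.crawl_delay("bingbot")        # value from the bingbot group
other_delay = parser.crawl_delay("SomeOtherBot")  # no delay set in the "*" group

# A polite crawler would sleep for this many seconds between requests.
seconds_to_wait = bing_delay or 0
print(bing_delay, other_delay, seconds_to_wait)
```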

Sitemap:

The Sitemap directive informs web crawlers about the location of your XML sitemap(s), which lists all the URLs you want to be indexed. There are no inherent dangers with this directive, but its misuse can occur if the provided URLs lead to non-existent or incomplete sitemaps, which can confuse crawlers or lead to incomplete indexing.
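As a minimal sketch, the Sitemap lines can be read back with `site_maps()` from Python's `urllib.robotparser` (available from Python 3.8). The rules and the sitemap URL below are hypothetical. The Sitemap line sits outside any user-agent group and applies to every crawler.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules with a sitemap index listed at the end of the file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

Sitemap: https://www.example.com/sitemap_index.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() returns a list of sitemap URLs, or None if the file lists none.
sitemaps = parser.site_maps()
print(sitemaps)
```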

There are also two symbols that can be used within URL paths to create more complex rules:

Star Symbol

* serves as a placeholder for any character sequence, or it can also mean all/any.

For example, Disallow: /page/* prevents crawling of /page/ itself and of any pages nested within the /page/ directory. Because rules already match by prefix, this has the same effect as Disallow: /page/.

Dollar Sign Symbol

$ denotes the end of a URL, so a rule ending in $ matches only URLs that end exactly at that point. Although rarely used, it is handy for targeting a single URL or a specific file extension.

For example, Disallow: /page/$ prevents crawling of /page/ itself, but it won’t match any pages or subdirectories nested below it (such as /page/child).
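The two symbols can be sketched as a translation into a regular expression. Python's standard-library robots.txt parser does not handle these wildcards, so the hypothetical helper `rule_matches` below is an illustrative approximation of the matching described above, not any crawler's actual implementation.

```python
import re


def rule_matches(rule_path: str, url_path: str) -> bool:
    """Check whether a robots.txt path pattern matches a URL path.

    "*" stands for any sequence of characters; a trailing "$" anchors
    the pattern to the end of the URL. Without "$", matching is by prefix.
    """
    anchored = rule_path.endswith("$")
    if anchored:
        rule_path = rule_path[:-1]
    # Escape regex metacharacters, then turn each escaped "*" into ".*".
    body = re.escape(rule_path).replace(r"\*", ".*")
    pattern = "^" + body + (r"\Z" if anchored else "")
    return re.match(pattern, url_path) is not None


print(rule_matches("/page/*", "/page/child/grandchild"))
print(rule_matches("/page/$", "/page/"))
print(rule_matches("/page/$", "/page/child"))
print(rule_matches("/subfolder/*.jpg$", "/subfolder/photo.JPG"))
```

The last call also shows the case sensitivity of paths: a pattern written for .jpg does not match .JPG.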

Example Robots.txt Directives

To illustrate some use cases, here are some common examples of robots.txt directives that you can adapt as required:

Allow all web crawlers access to all content:

User-agent: *

Disallow:

Block all web crawlers from all website content:

User-agent: *

Disallow: /

Block all web crawlers from PDF files, site-wide:

User-agent: *

Disallow: /*.pdf

Block all web crawlers from JPG files, only within a specific subfolder:

User-agent: *

Disallow: /subfolder/*.jpg$

Note: URLs and filenames are case-sensitive, so in the above example, .JPG files would still be allowed.

Block a specific web crawler from a specific folder:

User-agent: bingbot

Disallow: /blocked-subfolder/

Block a specific web crawler from a specific web page:

User-agent: baiduspider

Disallow: /subfolder/page-to-block

Note: Be careful with the above one. Rules match by prefix, so it also blocks any URL that begins with /subfolder/page-to-block, and adding a trailing slash would make the rule apply to a directory instead of the single page!
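The effect of a trailing slash can be checked with a short sketch using Python's `urllib.robotparser`. The helper `build_parser` and the `/details` child URL are hypothetical additions for illustration.

```python
from urllib.robotparser import RobotFileParser


def build_parser(disallow_path: str) -> RobotFileParser:
    """Parse a one-rule robots.txt for baiduspider (hypothetical helper)."""
    parser = RobotFileParser()
    parser.parse(["User-agent: baiduspider", f"Disallow: {disallow_path}"])
    return parser


page = "https://example.com/subfolder/page-to-block"
child = "https://example.com/subfolder/page-to-block/details"

without_slash = build_parser("/subfolder/page-to-block")
with_slash = build_parser("/subfolder/page-to-block/")

# Without the slash, the page and everything starting with its path is blocked.
page_blocked_without_slash = not without_slash.can_fetch("baiduspider", page)
child_blocked_without_slash = not without_slash.can_fetch("baiduspider", child)

# With the slash, only URLs below the "directory" are blocked; the page itself is not.
page_blocked_with_slash = not with_slash.can_fetch("baiduspider", page)
child_blocked_with_slash = not with_slash.can_fetch("baiduspider", child)

print(page_blocked_without_slash, child_blocked_without_slash)
print(page_blocked_with_slash, child_blocked_with_slash)
```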

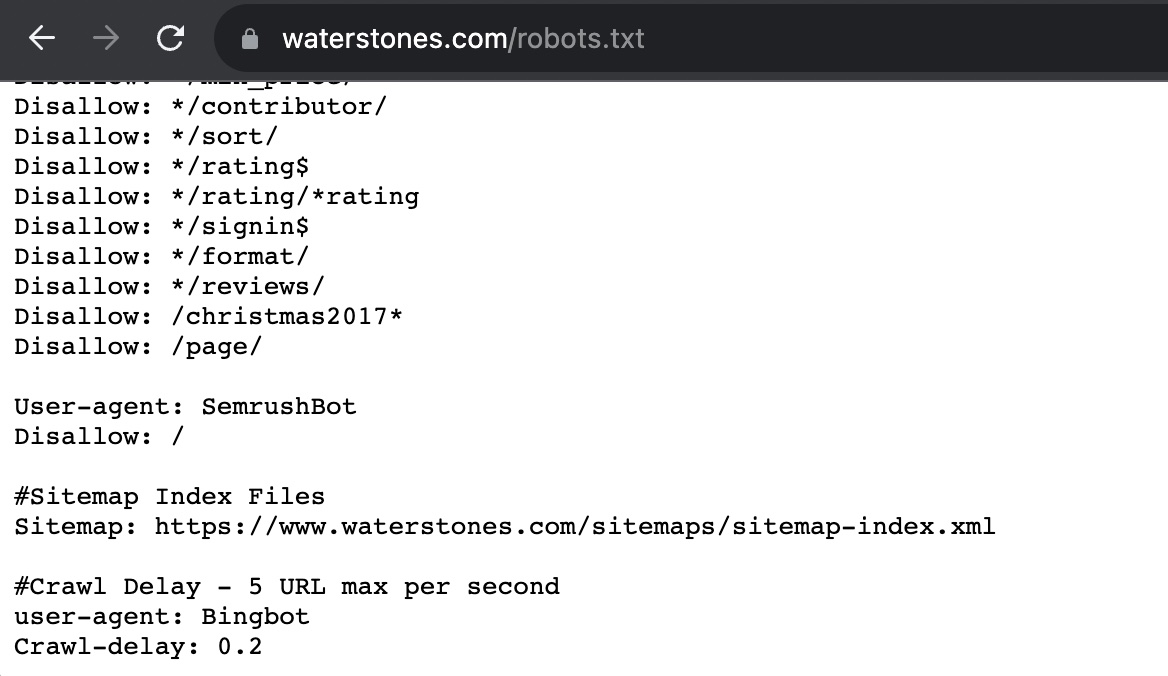

Blelow, you can see a few ways in which Waterstones.com (a UK-based bookstore chain), uses different directives. You can see it's blocking SemrushBot (an SEO platform) and it also lists its sitemap index file. Finally, it adds a crawl delay of 0.2 seconds for Bingbot specifically.

Robots.txt Best Practices

In general, the following best practices will aid in making sure robots.txt files are created and used correctly, so no unintended issues arise.

Robots.txt Testing Tool

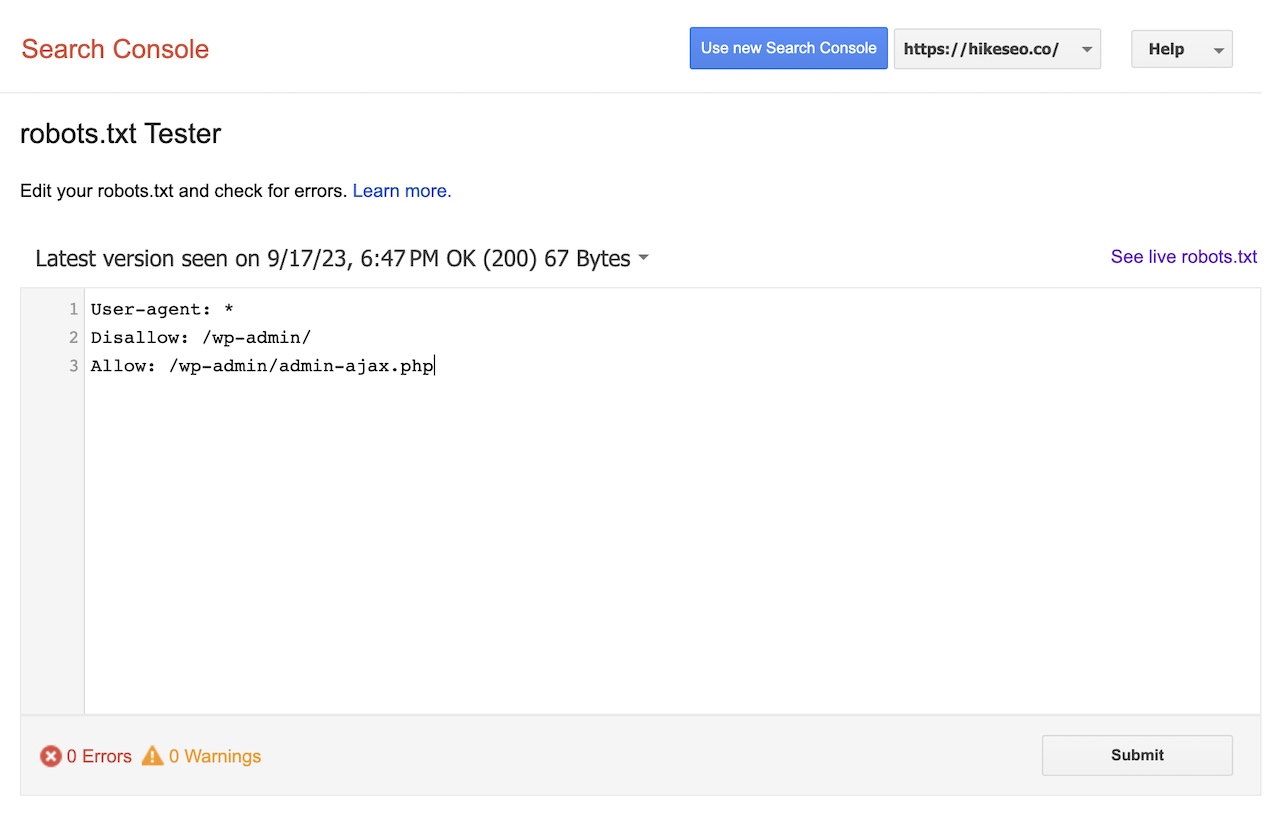

Once the robots.txt file has been created according to requirements and made live, if not already, it’s important to check to make sure it’s valid.

Google has a free tool to do just that, which can be found here:

https://www.google.com/webmasters/tools/robots-testing-tool

Simply submit a URL to the robots.txt Tester tool and it will flag up any errors or warnings.

For example, you'll see Hike's robots.txt file is error and warning-free:
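As a rough local complement to an online tester, the sketch below flags a few common mistakes before a file goes live. The function `lint_robots_txt` and its list of checks are hypothetical and far from exhaustive; it covers only the directives discussed in this guide and does not replicate any official validator.

```python
from typing import List

# Directives covered in this guide; anything else is reported as unknown.
KNOWN_DIRECTIVES = {"user-agent", "disallow", "allow", "crawl-delay", "sitemap"}


def lint_robots_txt(text: str) -> List[str]:
    """Return human-readable warnings for common robots.txt mistakes."""
    warnings = []
    seen_user_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        if ":" not in line:
            warnings.append(f"line {number}: missing ':' separator")
            continue
        directive, value = (part.strip() for part in line.split(":", 1))
        name = directive.lower()
        if name not in KNOWN_DIRECTIVES:
            warnings.append(f"line {number}: unknown directive '{directive}'")
        elif name == "user-agent":
            seen_user_agent = True
        elif name in {"allow", "disallow", "crawl-delay"} and not seen_user_agent:
            warnings.append(f"line {number}: '{directive}' appears before any User-agent line")
        elif name in {"allow", "disallow"} and value and not value.startswith(("/", "*")):
            warnings.append(f"line {number}: path should start with '/'")
    return warnings


sample = "Disallow: /tmp/\nUser-agent: *\nDisalow: /x/\nAllow private\n"
for warning in lint_robots_txt(sample):
    print(warning)
```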

Robots.txt vs. Meta Robots vs. X-Robots

In addition to the robots.txt file, you may have heard of meta robots and x-robots and wondered how they differ.

Firstly, robots.txt is a standalone text file, while meta robots and x-robots are directives attached to specific pages.

The main difference between them is that Robots.txt defines site-wide or directory-wide crawl behavior (including specific pages), while meta robots and x-robots indicate indexation behavior only at the page level.

More specifically, the differences between meta robots and x-robots are as follows:

Meta Robots is an HTML meta tag that is placed within the head section of individual web pages. It allows you to specify page-specific directives for web crawlers, such as "noindex" to prevent a specific page from being indexed or "nofollow" to instruct crawlers not to follow links on that page. Meta Robots provides more granular control compared to Robots.txt.
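To show where this tag lives in a page, here is a minimal Python sketch that extracts the directives from a page's head using the standard-library `html.parser`. The class name `MetaRobotsParser` and the sample HTML are hypothetical.

```python
from html.parser import HTMLParser


class MetaRobotsParser(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            # Directives are comma-separated, e.g. "noindex, nofollow".
            self.directives += [
                part.strip().lower() for part in content.split(",") if part.strip()
            ]


HTML = """<html><head>
<title>Example page</title>
<meta name="robots" content="noindex, nofollow">
</head><body></body></html>"""

meta_parser = MetaRobotsParser()
meta_parser.feed(HTML)
print(meta_parser.directives)
```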

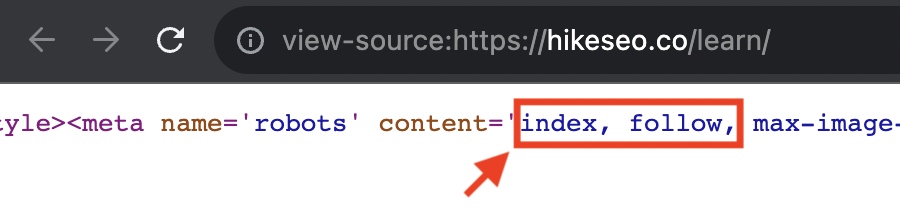

For example, on Hike's Learn SEO page, the meta robots tag has "index" and "follow", which tells search engine bots to index this page and follow all the links on this page:

X-Robots (the X-Robots-Tag) is an HTTP response header that can be set at the server level or for specific web pages. It offers similar capabilities to Meta Robots but is typically used for advanced scenarios, including non-HTML files such as PDFs and images, which cannot carry a meta tag. X-Robots allows you to control how content is indexed and displayed, including setting page-specific "noindex" directives or limiting image indexing. It's more flexible and powerful but may require more technical expertise to implement.
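One way to picture a server-level rule is a tiny WSGI application that adds the header to every PDF response. This is only a sketch: the functions `app` and `response_headers`, the paths, and the placeholder body are hypothetical, and real sites would more commonly set the header in their web server or framework configuration.

```python
def app(environ, start_response):
    """Tiny WSGI app that marks PDF responses as noindex (illustrative only)."""
    path = environ.get("PATH_INFO", "/")
    is_pdf = path.lower().endswith(".pdf")
    content_type = "application/pdf" if is_pdf else "text/html; charset=utf-8"
    headers = [("Content-Type", content_type)]
    if is_pdf:
        # Ask search engines not to index this file.
        headers.append(("X-Robots-Tag", "noindex"))
    start_response("200 OK", headers)
    return [b"placeholder body"]


def response_headers(path):
    """Call the app directly (no server needed) and return its headers as a dict."""
    captured = {}

    def start_response(status, headers):
        captured.update(headers)

    app({"PATH_INFO": path}, start_response)
    return captured


print(response_headers("/files/report.pdf"))
print(response_headers("/about"))
```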

Hike SEO

When it comes to empowering agencies serving SEO beginners or small business owners, Hike SEO makes for the perfect platform to enhance organic search visibility and traffic over time. With its diverse, practical, yet simple tools, users can easily grasp what needs to be done, without prior SEO knowledge.

Try Hike today, and start improving your SEO performance in easy-to-follow action steps.