Technical SEO: A Beginner's Guide To Technical SEO Audits

What is Technical SEO?

Technical SEO is the process of optimizing a website's technical elements to improve its search engine ranking and visibility. Technical SEO involves optimizing a website's backend infrastructure and code to ensure that it is search engine friendly, making it easier for search engines to crawl, index, and understand the content on the site.

Why Is Technical SEO Important?

Making sure that your website is optimized technically for search engines is important for several reasons:

Improves Website Crawlability - Optimising various technical aspects of a website for SEO helps search engines crawl and index your website more easily, which can improve your website's overall visibility in search results.

Enhances Website Performance - This ensures that your website loads quickly, which improves user experience and can increase engagement.

Increases Website Security - Implementing SSL/HTTPS is an important technical SEO task that helps to protect users’ data and increase trustworthiness.

Improves Mobile-Friendliness - With most users browsing the internet using mobile devices, it's important to make your website responsive and optimized for mobile devices. This provides a better user experience which leads to increased engagement.

Increases Visibility - Adding technical elements such as structured data helps search engines better understand the content on your website and can improve your chances of appearing in rich snippets and other types of search features, leading to increased visibility.

Is Technical SEO Complicated for Beginners?

The short answer is both yes and no - several aspects are quite simple for beginners to understand and implement, while other aspects can be quite technical and may be best suited for a technical SEO specialist to tackle. That being said, platforms like Hike make technical SEO simple by providing bite-sized, easy-to-implement actions that fit within the limited time busy small business owners have during the week.

Three Primary Elements of a Webpage

We like the way Moz.com provides analogies for each of the three elements that make up a web page:

HTML - What It Says

Web pages all contain text that has been marked up with HTML (Hypertext Markup Language) that tags text to add information about it so it can be more easily understood by browsers and search engines.

CSS - What It Looks Like

How a website is styled is determined by the technology called CSS or Cascading Style Sheets. This technology determines how various website elements and fonts look visually.

JavaScript - How It Behaves

How a website behaves when you interact with it, including many of the front-end actions processed by the browser, is powered by JavaScript (not to be confused with Java, a separate general-purpose programming language). JavaScript determines how elements on a webpage should behave when the user interacts with them.

Technical SEO deals primarily with the HTML layer. However, CSS and JavaScript can also be involved in a few aspects that affect SEO performance.

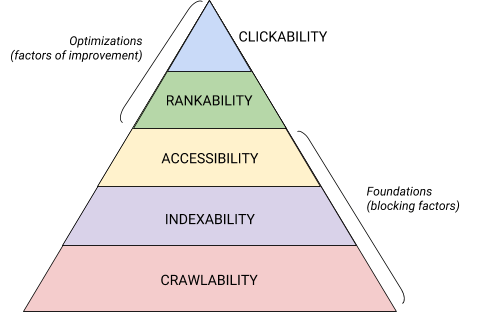

Technical SEO Hierarchy

Not all factors are created equal when it comes to SEO, so even when working on the technical SEO layers, it’s important to prioritize which aspects to tackle first.

Search Engine Land has put together this fantastic diagram of the Technical SEO Hierarchy of Needs, reminiscent of Maslow’s Hierarchy of Needs for humans.

The first and most important layer is Crawlability, which is how easily web crawlers can discover the pages on your website.

The next level of importance is Indexability: whether your pages are set up to be indexed, or whether issues are preventing search engines from indexing them.

Next is the Accessibility layer, which comes down to user experience and how easy it is to interact with the website and its content.

The first three layers are the foundations of Technical SEO and can make or break your organic search visibility.

The final two layers are Rankability and Clickability. Rankability has to do with content optimization, authority (see domain authority), credibility, and trustworthiness of the website and pages. Clickability is about how engaging or sticky the content is: how well it attracts users to the website from the search engines (high click-through rate), how long they stay on the page, and how many pages they visit during a session.

Site Structure & Navigation

How website pages are structured and how navigation is designed are major factors in how easily a website can be crawled by search engines and in creating an enjoyable user experience. Let’s explore how to optimize some of these aspects.

Shallow Structure - But Not Flat!

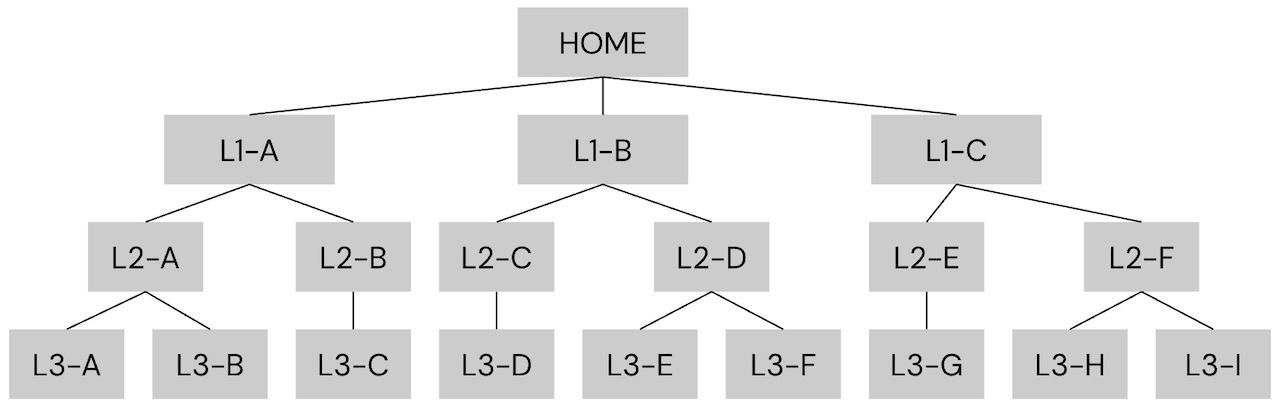

The hierarchy of the page structure of a website can be either deep (more than three layers of hierarchy), shallow (two to three layers of hierarchy), or flat (one layer of hierarchy).

Try to structure your content so that it ideally has a shallow structure, meaning two to three layers of hierarchy at most.

An example URL for a page on the third level would be: www.example.com/L1-B/L2-D/L3-F/
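
To make the deep, shallow, and flat labels concrete, here is a minimal Python sketch that counts the levels in a URL path and labels a site by its deepest page. The function names are made up for illustration, and the thresholds simply mirror the definitions above.

```python
from urllib.parse import urlparse


def url_depth(url: str) -> int:
    """Count the non-empty path segments in a URL."""
    path = urlparse(url).path
    return len([segment for segment in path.split("/") if segment])


def classify_structure(max_depth: int) -> str:
    """Label a site by its deepest page, using the thresholds from the text."""
    if max_depth <= 1:
        return "flat"
    if max_depth <= 3:
        return "shallow"
    return "deep"


urls = [
    "https://www.example.com/L1-B/",
    "https://www.example.com/L1-B/L2-D/",
    "https://www.example.com/L1-B/L2-D/L3-F/",
]
deepest = max(url_depth(u) for u in urls)
print(deepest, classify_structure(deepest))  # 3 shallow
```

Running something like this over a list of URLs exported from a crawl gives a quick feel for whether a site has drifted into a deep structure.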

Unless you have a very small website that doesn’t have enough content to warrant creating a hierarchy, try to avoid flat (one-level) website structures as it can confuse users and search engines if similar content isn’t grouped, and it doesn’t utilize the flow of backlink authority effectively.

Breadcrumbs

On websites, these don’t come from bread! Breadcrumbs are navigational structured link elements that help users (and search engines) navigate more easily throughout a hierarchical website page structure. These are very commonly used on Blogs or E-Commerce websites. Below is an example of a breadcrumb on the fictional page L3-F in the example structure above.

Home > L1-B > L2-D > L3-F

Users and search engines can then easily navigate to parent pages, and also see where they are relative to other content pages on the website so they can navigate to the right area efficiently.
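
As a rough illustration, the Python sketch below derives the breadcrumb trail for the fictional L3-F page from its URL and serialises it as schema.org BreadcrumbList markup, a format search engines can read. Using the raw URL segments as labels is an assumption made for brevity; a real site would use the page titles.

```python
import json
from urllib.parse import urlparse


def breadcrumb_trail(url: str) -> list[tuple[str, str]]:
    """Return (label, url) pairs from the home page down to the given page."""
    parsed = urlparse(url)
    root = f"{parsed.scheme}://{parsed.netloc}/"
    trail = [("Home", root)]
    current = root
    for segment in (s for s in parsed.path.split("/") if s):
        current += segment + "/"
        trail.append((segment, current))
    return trail


def breadcrumb_json_ld(trail: list[tuple[str, str]]) -> str:
    """Serialise the trail as schema.org BreadcrumbList markup."""
    data = {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": i, "name": name, "item": link}
            for i, (name, link) in enumerate(trail, start=1)
        ],
    }
    return json.dumps(data, indent=2)


trail = breadcrumb_trail("https://www.example.com/L1-B/L2-D/L3-F/")
print(" > ".join(name for name, _ in trail))  # Home > L1-B > L2-D > L3-F
```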

Pagination

If you have a page with a list of links to content such as blog articles, products, or other types, then instead of having an endless list on a single page, it’s better to implement pagination. There are quite a few reasons for this:

Most CMS platforms automatically have pagination built into their lists, but it’s important to ensure not too many items are loaded at once per page - in general, a common practice is to use between 10 and 30 items per page, but this can vary depending on page load performance and user behavior.
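
If you ever need to build pagination yourself, the logic is small. The Python sketch below assumes 20 items per page (a value picked from the 10 to 30 range above) and a hypothetical list of 45 blog posts.

```python
import math


def paginate(items: list[str], page: int, per_page: int = 20) -> list[str]:
    """Return the slice of items shown on a 1-indexed page."""
    if page < 1 or per_page < 1:
        raise ValueError("page and per_page must be positive")
    start = (page - 1) * per_page
    return items[start:start + per_page]


def page_count(total_items: int, per_page: int = 20) -> int:
    """Number of pages needed to show every item."""
    return math.ceil(total_items / per_page)


posts = [f"post-{n}" for n in range(1, 46)]  # 45 hypothetical blog posts
print(page_count(len(posts)))       # 3
print(paginate(posts, page=3)[:2])  # ['post-41', 'post-42']
```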

Consistent URL Structure

Some elements to consider to optimize your URL structure and make it consistent across your website:

Logically Organised

It’s important to group similar pages together into a content cluster so that it gives context to search engines to understand what the pages within that cluster are about and helps users to navigate through similar content more efficiently. This also creates an ideal situation for linking between these similar pages, increasing crawl efficiency.

Easy For Users To Navigate

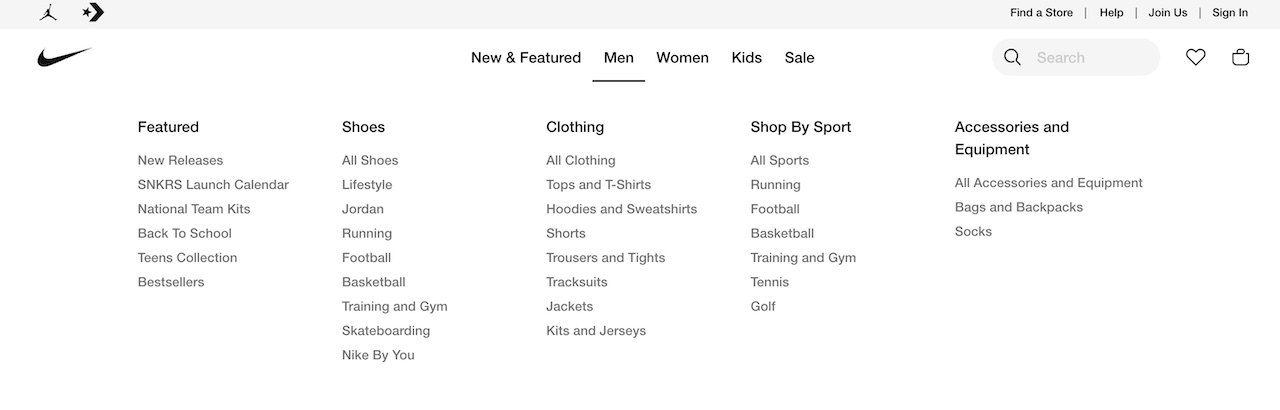

The website menu and footer links should be structured to match the structure of the website, so users can easily navigate through content without feeling overwhelmed or lost. For category-level pages, it makes sense to have sub-menus to allow users to discover content within those categories faster.

For example, here are the category pages on the nike.com website, each with their own set of sub-menus that narrow down the content of the categories:

Crawling, Loading, Indexing

How efficiently a website is designed for search engine bots to crawl will influence how quickly it will be crawled. The speed at which web pages load for search engines and users is also an important crawling and user experience factor. Finally, how indexable a website is determines which pages show up in the search results. These technical aspects go a long way to improving your SEO. Let’s explore each one in more detail.

Identifying Issues in GSC

Go to the "Coverage" report in Google Search Console (shown as "Pages" under "Indexing" in newer versions of the interface) and check for crawl errors. This report will show you any pages that Google was unable to crawl or that have issues that prevent Google from indexing them.

In the "Index" section of the Google Search Console, you can check the number of pages indexed by Google. If the number of indexed pages is lower than the number of pages on your site, there may be crawling or indexing issues.

The URL inspection tool in Google Search Console allows you to check the status of a specific page on your website. You can use this tool to see if Google can crawl and index a page, and if any issues need to be fixed.

In the "Crawl Stats" section of Google Search Console, you can see how often Google is crawling your website. If the crawl rate is low, there may be issues that are preventing Google from crawling your site effectively.

Crawl Your Website

Although GSC automatically reports certain issues that Google finds when it crawls your website, dedicated crawling software is much faster and can highlight issues such as pages that are noindexed, return a 404, use a 302 temporary redirect, or simply load too slowly. A popular tool is Screaming Frog, which is free for up to 500 URLs, but you can also just get Hike to automatically crawl your website and flag any issues for you in a list of actions that you can easily tackle and tick off one by one. Read more about web crawling and search engine indexing.

XML Sitemap

An XML sitemap is an essential file that should be on your website if you want Google and other search engines to easily crawl and index your website. Most CMS platforms have this built-in or at least have SEO plugins that add this functionality to it, which automatically update when pages are added, modified, or deleted.
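
If your platform does not generate a sitemap for you, the file itself is simple. Here is a minimal Python sketch that builds one in the sitemaps.org format; the page URLs and dates are placeholders, and `build_sitemap` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Build sitemap XML from (url, last-modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


sitemap = build_sitemap([
    ("https://www.example.com/", "2024-01-15"),
    ("https://www.example.com/blog/", "2024-02-01"),
])
print(sitemap)
```

The output would be saved as sitemap.xml at the root of the site and regenerated whenever pages are added, modified, or deleted.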

Robots.txt

The robots.txt file contains rules for how search engine bots should crawl a website. It should also include a link to your XML sitemap, which is commonly placed at the beginning, before the rules, although the Sitemap line is valid anywhere in the file. The rules can include how quickly web crawlers may crawl the web pages (crawl rate, set with the Crawl-delay directive, which some crawlers honor but Google ignores) as well as access restrictions that prevent spiders from crawling certain file types, directories, or specific pages. You can also configure individual pages using the robots meta tag.

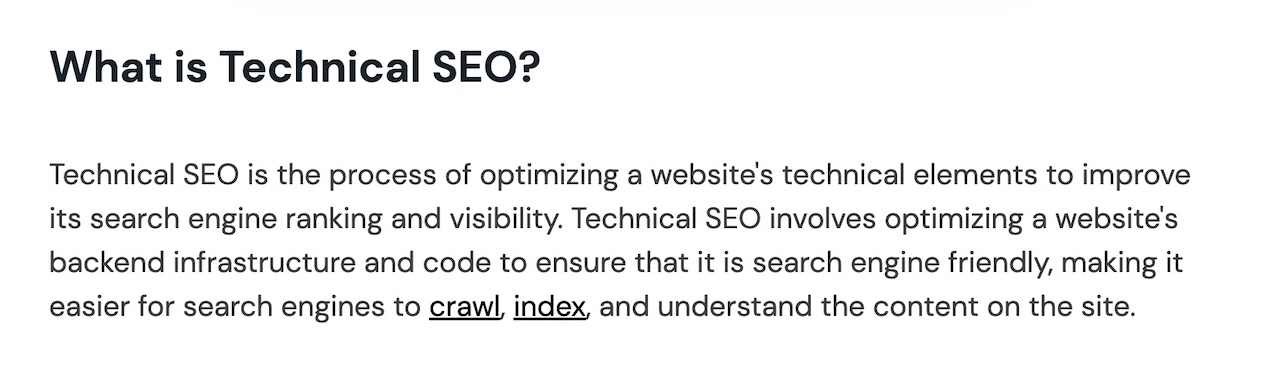
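
To see how a crawler reads these rules, the Python sketch below feeds a small sample robots.txt to the standard library's `urllib.robotparser`. The rules and paths are invented for illustration, so treat this as one possible setup rather than a recommended configuration.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt: sitemap link first, then the rules.
ROBOTS_TXT = """\
Sitemap: https://www.example.com/sitemap.xml

User-agent: *
Crawl-delay: 5
Disallow: /admin/
Disallow: /cart/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

print(parser.can_fetch("*", "https://www.example.com/blog/"))   # True
print(parser.can_fetch("*", "https://www.example.com/admin/"))  # False
print(parser.crawl_delay("*"))                                  # 5
print(parser.site_maps())  # ['https://www.example.com/sitemap.xml']
```

A check like this is a quick way to confirm that a rule blocks only what you intended before you publish the file.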

Internal Linking

Linking between pages on a website (internal linking) allows web crawlers to discover new pages and build a map of relationships between page topics. This adds further context and distributes page authority between related pages, which helps increase the ranking and visibility of those pages.

Aim for at least 2-3 internal links in each piece of content, pointing to pages with related topics.

Below is an example of internal linking from the first paragraph of this very article, with links on the anchor text 'crawl' and 'index' that link to other articles dedicated to those topics:

In hierarchical page structures, breadcrumbs naturally provide this internal linking between parent and child pages, but it’s also important to cross-link between pages on the same hierarchy level.

Internal linking also improves user experience by giving users the ability to discover additional relevant information on linked topics.
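
One way to check a page against the 2-3 internal links guideline is to count the links that stay on your own domain. The Python sketch below does this with the standard library; the HTML snippet and URLs are made up for the example.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag in a document."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def internal_links(html: str, page_url: str) -> list[str]:
    """Return absolute URLs of links pointing at the same host as page_url."""
    collector = LinkCollector()
    collector.feed(html)
    host = urlparse(page_url).netloc
    absolute = (urljoin(page_url, href) for href in collector.hrefs)
    return [url for url in absolute if urlparse(url).netloc == host]


html = """
<p>Learn how search engines <a href="/blog/crawling/">crawl</a> and
<a href="/blog/indexing/">index</a> pages, or read
<a href="https://other.example.org/">an external guide</a>.</p>
"""
links = internal_links(html, "https://www.example.com/blog/technical-seo/")
print(len(links))  # 2
```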

Discover & Fix Dead Links

Broken links (404 pages) create a negative experience for users on a website, so it’s important to find them and fix them with 301 redirects to the next most relevant page. You can use GSC to flag any 404s or use a crawling tool to discover broken links on your website. Discover what the difference is between 301 vs 302 redirects.

Another important thing to check for is inbound links from external websites to pages on your website that don’t exist. These pages may have been deleted a long time ago and forgotten, but other websites may not have known that and continue to link to a resource that doesn’t exist.

Looking at the landing page traffic data within Google Analytics allows you to spot non-existent pages users are landing on that can be redirected to reclaim this otherwise lost traffic.

If you are planning a website migration, or have already completed one, make sure to check for any 404 issues along with other potential aspects that could affect your SEO.

If you use a CMS like WordPress or Shopify, you can explore how to do redirections using WordPress or how to implement redirects in Shopify.

Fix Redirect Chains

When URLs get redirected, and then those new pages get redirected again, it can cause a chain of redirects from the original page. These redirect chains have negative influences on SEO, such as:

To fix redirect chains, all you need to do is cut out the redirects in the middle of the chain, so the first URL redirects to the last URL.
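
The fix can be sketched in a few lines of Python. This example assumes your redirects are available as a simple mapping from old path to new path (for instance, exported from a redirect plugin) and rewrites every entry to point straight at the end of its chain.

```python
def flatten_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Point every source URL straight at the final destination of its chain."""
    flattened = {}
    for source in redirects:
        seen = {source}
        target = redirects[source]
        # Follow the chain until we reach a URL that is not redirected.
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        flattened[source] = target
    return flattened


chain = {"/old-page/": "/newer-page/", "/newer-page/": "/newest-page/"}
print(flatten_redirects(chain))
# {'/old-page/': '/newest-page/', '/newer-page/': '/newest-page/'}
```

The loop check is there because two redirects that point at each other would otherwise never resolve.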

Duplicate or Thin Content

Google is all about providing the highest quality user experience, and that includes serving up web pages with high-quality content: unique, useful, original content that is focused on providing value for the user and not solely for the search engine.

Duplicate content is any content across websites or within a website that is the same or very similar. This causes a lower quality experience for users as they may already have come across that content and there’s no added value in coming across the same content on a different page.

Thin content is very minimal to no content on pages, for example, on category pages, or on e-commerce pages that don’t have descriptions on products.

Both are issues that need to be avoided, whether the duplicate or thin content is intentional or created automatically by how the technology is configured. Here are a few ways to avoid these two issues:

One Unique Topic Per Page Rule

Whenever a new page or article on your website is created, ask yourself: Do I already have a page or article that talks about this topic? If not, then it’s OK to create a new page. If you do, then it’s important to make the new page different enough that it warrants its own page. If there are multiple pages about the same topic, or about topics closely enough related to be considered the same, then Google and other search engines will be confused as to which one is the authority on the topic and won’t know which one to rank. This is known as keyword cannibalization, and it hurts rankings. If you can’t avoid having two or more pages with the same content or similar topics, then this is where canonical tags come into play.

Canonical Tags

In addition to user-created duplicate content, duplicate content can be caused by technical phenomena such as:

If there is duplicate content, then one of those duplicates needs to be chosen as the canonical page that will be designated to be returned in the search results. The rest of the duplicate pages point to that canonical page, so search engines know. For all other pages that don’t have duplicate content, it’s best practice to have self-referencing canonical tags just in case the above three technical phenomena occur.
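
A canonical tag is a `<link rel="canonical">` element in the page's head. As a rough sketch, the Python below reads that tag from a page so you can confirm a duplicate points at the page you chose; the sample HTML and URL are hypothetical.

```python
from html.parser import HTMLParser
from typing import Optional


class CanonicalFinder(HTMLParser):
    """Record the href of the first <link rel="canonical"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel and self.canonical is None:
            self.canonical = attributes.get("href")


def find_canonical(html: str) -> Optional[str]:
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical


# A hypothetical sorted product listing that duplicates the main category page.
duplicate_page = """
<html><head>
<link rel="canonical" href="https://www.example.com/shoes/">
</head><body>Shoes sorted by price</body></html>
"""
print(find_canonical(duplicate_page))  # https://www.example.com/shoes/
```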

Noindex Pages

Another way to have duplicate content without affecting SEO is to noindex the duplicates so Google and other search engines know that these are not intended to be indexed. However, it’s always important to use canonicals as the primary method for flagging and preventing duplicate content.
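
Noindexing is usually done with a robots meta tag such as `<meta name="robots" content="noindex, follow">`. The Python sketch below, which uses a made-up helper name, checks whether a page carries that directive.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collect the directives from every <meta name="robots"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            for directive in content.split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())


def is_noindexed(html: str) -> bool:
    finder = RobotsMetaFinder()
    finder.feed(html)
    return "noindex" in finder.directives


page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(is_noindexed(page))  # True
```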

Avoid Thin Content

To avoid thin content, every page must have some form of useful content on it, even if it’s a category page. Even if it’s 100-150 words describing what the page is about, that will help users navigate through the website and search engines to understand the page.

Page Loading Speed

The speed at which a website page loads is important on several levels, primarily for user experience. If a page takes too long to load, the user will become frustrated or impatient and leave within a few seconds, which is known as a bounce. That’s why pages should ideally load within 1-3 seconds to ensure users can access the content they want quickly.

Another reason for increasing page loading speed is that web crawlers have certain requirements as to how long they will wait for a page to load before skipping it and moving on to the next page. Therefore, slow-loading web pages can cause crawling issues on the website.

Let’s explore some of the common factors that affect page loading speed.

Optimise Images

One of the most common problems that affect page loading speed is unoptimized images, which means they might be in a format that isn’t ideal for the web, have too high a resolution, or aren’t compressed enough to lower the file size. Ensuring that images are optimized before uploading them to the website is an important practice to establish, and that can start with optimizing existing images on the website.

Optimise Page Size

There are many other page speed factors, but another one that affects page size, other than images or media, is local scripts. If you use a CMS like WordPress, then too many plugins can cause the website pages to increase in size because of the extra CSS and JavaScript files that may accompany them. The same goes for the website theme - if it’s a “heavy” theme with lots of bells and whistles, then the CSS and JavaScript files may increase the overall page sizes.

Use a CDN

If your website is hosted on a single web server, then the page loading speed can vary based on where in the world the user is accessing the website. To improve this speed, you can use a CDN (Content Delivery Network), a system that stores copies of the website pages across a network of servers worldwide so that each user is served from a location near them. CDNs often also provide media optimization and security benefits. If you’re using a managed CMS like Wix, Squarespace, WordPress.com, or another non-self-hosted CMS, then this functionality is already built in.

Reduce 3rd Party Scripts

Every time a script is added to your website, whether it’s for tracking, data, or other purposes, it adds more requests to the page load, decreasing the page speed. Therefore, it’s important to be highly selective as to which 3rd party scripts to install on the website, or if needed, then use a tag manager like Google Tag Manager that allows you to add or manage them from a single dashboard.

A great way to decrease the file size of scripts is minification, which shrinks the code by removing unnecessary characters such as whitespace, line breaks, and comments.

Other Technical SEO Aspects

Finally, we come to the other technical SEO aspects that don’t fall within any of the categories previously mentioned.

Hreflang for International Sites

If your website content has more than one language or is targeting more than one country or region, then it’s important to make sure that hreflang tags are implemented on the website to let search engines know which language and country each page is associated with. Otherwise, this could cause duplicate content issues.
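
Hreflang tags are `<link rel="alternate">` elements, one per language or region version, and every version of the page should list the full set, including itself. Here is a minimal Python sketch that generates them; the language codes and URLs are examples only.

```python
from html import escape


def hreflang_tags(alternates: dict[str, str], default_url: str) -> list[str]:
    """Build one <link rel="alternate"> tag per language/region variant."""
    tags = [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}" />'
        for code, url in alternates.items()
    ]
    # x-default tells search engines which page to use when no variant matches.
    tags.append(
        f'<link rel="alternate" hreflang="x-default" href="{escape(default_url)}" />'
    )
    return tags


tags = hreflang_tags(
    {
        "en-gb": "https://www.example.com/uk/",
        "en-us": "https://www.example.com/us/",
        "de-de": "https://www.example.com/de/",
    },
    default_url="https://www.example.com/",
)
print("\n".join(tags))
```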

404 Links

Dead links or 404 links are links that point to a page that either does not exist anymore or has been recently unpublished or deleted. 404 links create a negative user experience for visitors, frustrating them since they can’t find the content they thought they would find. Therefore, it’s vital that 404 not found errors are fixed by redirecting them to the next most relevant page.

Structured Data

Another aspect of technical SEO is structured data - this includes formats such as Schema.org markup, Open Graph tags, and others. Structured data is information about information: it tells search engines what a piece of information is about, allowing it to be pulled directly into the search results as enhanced results (rich snippets). Examples include reviews, an organization or person’s name, address, phone number, and many others. Without structured data, it is more difficult for search engines to find the quick, useful information that users expect in the search results.
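
One common way to add Schema.org structured data is a JSON-LD script block in the page's HTML. The Python sketch below builds one for an organization's name, address, and phone number; the business details are entirely fictional.

```python
import json

# Fictional business details, for illustration only.
organization = {
    "@context": "https://schema.org",
    "@type": "Organization",
    "name": "Example Bakery",
    "url": "https://www.example.com/",
    "telephone": "+44 20 7946 0000",
    "address": {
        "@type": "PostalAddress",
        "streetAddress": "1 Example Street",
        "addressLocality": "London",
        "addressCountry": "GB",
    },
}

snippet = (
    '<script type="application/ld+json">\n'
    + json.dumps(organization, indent=2)
    + "\n</script>"
)
print(snippet)
```

The printed snippet would be pasted into the page's head, or added through your CMS or SEO plugin if it offers a structured data setting.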

XML Sitemap Validation

Although most CMS platforms have automatic XML sitemap creation either natively built-in or through third-party plugins, if your website has a custom-created XML sitemap, it’s important to validate it so it’s following the standard requirements. Here’s a useful XML sitemap validation tool you can use.
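
For a quick sanity check before reaching for a full validator, a few basics can be tested in Python. This sketch checks only that the file is well-formed XML, has the expected root element, stays under the 50,000-URL limit of the sitemaps.org protocol, and uses absolute URLs. It is not a complete validation.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000  # per-file limit in the sitemaps.org protocol


def sitemap_problems(xml_text: str) -> list[str]:
    """Return a list of human-readable problems; empty means none found."""
    try:
        root = ET.fromstring(xml_text.encode("utf-8"))
    except ET.ParseError as error:
        return [f"not well-formed XML: {error}"]

    if root.tag != f"{{{SITEMAP_NS}}}urlset":
        return [f"unexpected root element: {root.tag}"]

    problems = []
    entries = root.findall(f"{{{SITEMAP_NS}}}url")
    if len(entries) > MAX_URLS:
        problems.append(f"too many URLs: {len(entries)}")
    for index, entry in enumerate(entries, start=1):
        loc = entry.findtext(f"{{{SITEMAP_NS}}}loc", default="").strip()
        if urlparse(loc).scheme not in ("http", "https"):
            problems.append(f"entry {index}: missing or relative <loc>")
    return problems


sample = """<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>/blog/</loc></url>
</urlset>"""
print(sitemap_problems(sample))  # ['entry 2: missing or relative <loc>']
```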

Noindex the Tag and Category Pages in WP

If you use WordPress as your CMS, then chances are you may be using the tag or category pages, which often create more clutter than value. These default pages often don’t have any information on them other than a list of matching blog articles. Therefore, to prevent possible duplicate content or simply clean up search results from pages that won’t be useful to the search user, it’s good practice to noindex these pages. Plugins like RankMath or YoastSEO have settings to noindex these types of pages from day one.

Page Experience Signals

Another category of technical SEO aspects includes page experience signals which are specific aspects of a website that directly affect a user’s experience. Below are three of them:

Avoid Intrusive Pop-Ups (Interstitials)

Nobody likes to land on a website with annoying popups or ads that they need to close to be able to continue reading the content they came to the website for. Avoid using intrusive popups that annoy users or interrupt their experience on the page, if optimizing for search engine traffic is your goal. It doesn’t mean you can’t have ads or lead capture forms, but they should not interfere with the user’s experience. For example, you could add a floating bar at the bottom, or embed a call to action every 500 words of content.

Mobile Friendliness

How well a website is optimized for, performs, and looks on mobile devices is important since most users browse the web on their mobile devices over a desktop device. Make sure that your website design is responsive and adjusts based on the device screen size, loads fast on mobile devices that may use slower networks, and looks good on smaller and vertical mobile screens. Using a mobile-friendly test will help flag up any potential issues.

HTTPS

Data privacy and security are more important than ever, especially with EU laws such as GDPR and other countries developing regulations on how data is collected, processed, and stored. Keeping users’ information secure when they submit sensitive data is critical, so it’s essential to use the HTTPS protocol (HTTP secured with SSL/TLS) rather than plain HTTP. SSL/TLS encrypts the data transferred between the browser and the server, making intercepted traffic virtually impossible to read and keeping users’ data safe in transit. This builds trust with the user and this is exactly what Google also wants for its users when they land on websites.

Top-Level Domains

Another technical aspect is the type of domain that is chosen. The ending of a domain name (such as .com or .co.uk) is called the top-level domain, and it can affect how easily your website is remembered. It's also important to consider if your business targets multiple languages and/or countries (international SEO), as you may have to decide between ccTLDs and gTLDs.

Hike + Technical SEO

Technical SEO is a large subject consisting of many factors, some of which can be quite technical, but it doesn’t have to be complicated. By creating a Hike account, small businesses can take the complexity out of discovering and fixing technical SEO issues.

Hike automatically flags these as actions within your account, and gives helpful yet simple guidance on why the aspect is important, how to fix it, and how long it will take. Yes, it’s really that simple! Of course, there always may be one or two items that you may need a hand with, but we are here to help and support you along the way. Sign up to Hike and begin improving your Technical SEO today.